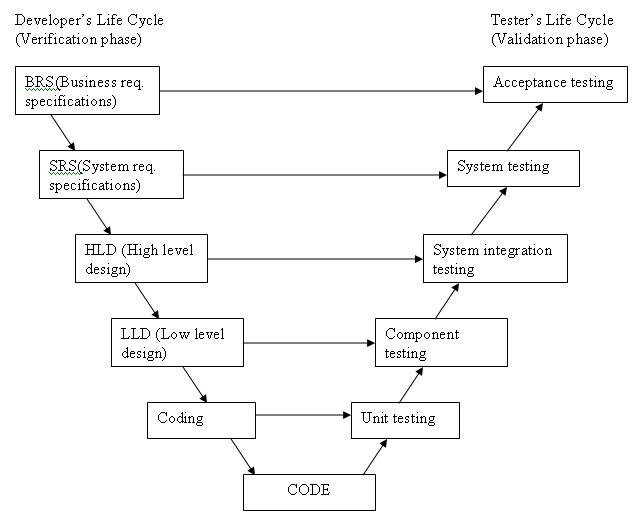

This section describes the different types of testing that may be used to test software during the software development life cycle (SDLC).

Manual Testing

Manual testing means testing software manually, i.e., without using any automated tool or script. In this type, the tester takes on the role of an end user and tests the software to identify any unexpected behavior or bug. Manual testing has different stages, such as unit testing, integration testing, system testing, and user acceptance testing.

Testers use test plans, test cases, or test scenarios to ensure the completeness of testing. Manual testing also includes exploratory testing, in which testers explore the software to identify errors in it.

Automation Testing

Automation testing, also known as test automation, is when the tester writes scripts and uses another piece of software to test the product. This process involves automating a manual process. Automation testing is used to re-run, quickly and repeatedly, the test scenarios that were previously performed manually.

Apart from regression testing, automation testing is also used to test the application from a load, performance, and stress point of view. Compared with manual testing, it can increase test coverage, improve accuracy, and save time and money.
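As a rough illustration of testing from a load point of view, the sketch below simulates many virtual users calling the system at the same time and summarizes the response times. It uses only the Python standard library. The function `handle_request` is a hypothetical stand-in for the real system under test, and the names `run_load_test`, `virtual_users`, and `max_workers` are assumptions made for this example, not part of any particular tool.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def handle_request(user_id: int) -> bool:
    """Hypothetical stand-in for the system under test."""
    time.sleep(0.001)  # simulate a small amount of work per request
    return user_id >= 0


def run_load_test(virtual_users: int, max_workers: int = 20) -> dict:
    """Send one request per virtual user concurrently and summarize timings."""

    def timed_call(user_id: int):
        start = time.perf_counter()
        ok = handle_request(user_id)
        return ok, time.perf_counter() - start

    # Each worker thread plays the role of one concurrent virtual user.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        results = list(pool.map(timed_call, range(virtual_users)))

    durations = [elapsed for _, elapsed in results]
    return {
        "requests": len(results),
        "failures": sum(1 for ok, _ in results if not ok),
        "max_seconds": max(durations),
        "mean_seconds": sum(durations) / len(durations),
    }


if __name__ == "__main__":
    print(run_load_test(virtual_users=200))
```

A dedicated load-testing tool would normally drive a deployed application over the network; this sketch only shows the general idea of running the same scenario for many simulated users and collecting timing data.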

What to Automate?

It is not possible to automate everything in a software product. Areas where users make transactions, such as login or registration forms, and any area that a large number of users can access simultaneously should be automated.

Furthermore, all GUI items, connections with databases, field validations, etc. can be efficiently tested by automating the manual process.
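Field validation on a registration form is a typical candidate for automation. The following is a minimal sketch using Python's built-in `unittest` module. The function `validate_registration` and its rules (a username of 3 to 20 letters, digits, or underscores, and a password of at least 8 characters) are hypothetical and exist only to give the tests something to check.

```python
import re
import unittest


def validate_registration(username: str, password: str) -> list:
    """Hypothetical validator: returns a list of error messages (empty if valid)."""
    errors = []
    if not re.fullmatch(r"[A-Za-z0-9_]{3,20}", username):
        errors.append("invalid username")
    if len(password) < 8:
        errors.append("password too short")
    return errors


class RegistrationFieldTests(unittest.TestCase):
    """Automated versions of checks a tester would otherwise repeat by hand."""

    def test_valid_input_has_no_errors(self):
        self.assertEqual(validate_registration("alice_01", "s3cretpass"), [])

    def test_short_username_is_rejected(self):
        self.assertIn("invalid username", validate_registration("al", "s3cretpass"))

    def test_short_password_is_rejected(self):
        self.assertIn("password too short", validate_registration("alice_01", "short"))
```

If the code is saved as a module, the tests can be run with `python -m unittest` followed by the module name, and re-run unchanged after every modification of the validation logic.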

When to Automate?

Test automation should be used by considering the following aspects of the software:

- Large and critical projects

- Projects that require testing the same areas frequently

- Requirements not changing frequently

- Assessing the application for load and performance with many virtual users

- Stable software with respect to manual testing

- Availability of time

How to Automate?

Automation is done by using a supporting scripting language, such as VBScript, together with an automated testing tool. There are many tools available that can be used to write automation scripts. Before mentioning the tools, let us identify the steps that can be used to automate the testing process:

- Identifying areas within the software for automation

- Selection of an appropriate tool for test automation

- Writing test scripts

- Development of test suites

- Execution of scripts

- Creation of result reports

- Identification of any potential bugs or performance issues
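The later steps of this process (writing test scripts, developing a test suite, executing it, and creating a result report) can be sketched with Python's built-in `unittest` module. This is only one possible way to do it. The function `apply_discount` is a hypothetical piece of code under test, and `build_suite` and `run_and_report` are helper names invented for this example.

```python
import io
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical function under test."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class DiscountTests(unittest.TestCase):
    """Step: writing test scripts."""

    def test_ten_percent_off(self):
        self.assertEqual(apply_discount(200.0, 10), 180.0)

    def test_invalid_percent_raises(self):
        with self.assertRaises(ValueError):
            apply_discount(200.0, 150)


def build_suite() -> unittest.TestSuite:
    """Step: development of test suites."""
    return unittest.defaultTestLoader.loadTestsFromTestCase(DiscountTests)


def run_and_report() -> str:
    """Steps: execution of scripts and creation of a result report."""
    stream = io.StringIO()
    result = unittest.TextTestRunner(stream=stream, verbosity=2).run(build_suite())
    summary = (
        f"ran={result.testsRun} "
        f"failures={len(result.failures)} errors={len(result.errors)}"
    )
    return stream.getvalue() + summary


if __name__ == "__main__":
    print(run_and_report())
```

Any failures or errors listed in the report point to potential bugs that then need to be investigated manually.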

Software Testing Tools

The following tools can be used for automation testing:

- HP Quick Test Professional

- Selenium

- IBM Rational Functional Tester

- SilkTest

- TestComplete

- Testing Anywhere

- WinRunner

- LoadRunner

- Visual Studio Test Professional

- WATIR